Retrieval-Augmented Generation (RAG) is important because it helps LLMs give more accurate answers by including content (or context) from your private data. See this post on how you can implement RAG quickly and securely.

With RAG, your private data is stored as embeddings in a vector database. Depending on the user query, data is retrieved from this database using a type of search called “semantic search.” Semantic search is cool because it understands questions more like a human does, e.g. it treats “cost” and “price” as meaning the same thing.

But it’s not perfect. It sometimes misses relevant keywords. In some cases a precise keyword in the query, e.g. a brand or website name, must be matched exactly to get correct results.

Hybrid Search

To improve this, Amazon Bedrock introduced a new feature called “hybrid search.” Hybrid search combines semantic search with the old-school keyword search. It uses the strengths of both to give you better results. Here is an example:

what is the cost of the cycle of the brand <brand> on <website>?

In this query, a semantic search for “cost” and a keyword search for “brand” and “website” will yield a better search result or context.
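To make the idea concrete, here is a toy sketch in Python. It is not how Bedrock scores results internally (the source does not say); it only illustrates why blending the two signals helps. The `CONCEPTS` map is a hypothetical stand-in for an embedding model, and the brand `Acme`, the site `shop.example`, and the weight `alpha` are made up for illustration.

```python
import re

# Hypothetical stand-in for an embedding model: words that share a
# meaning map to the same "concept".
CONCEPTS = {
    "cost": "price", "price": "price",
    "cycle": "bicycle", "bike": "bicycle", "bicycle": "bicycle",
}


def tokens(text):
    """Lowercase word tokens; dots are kept so 'shop.example' stays whole."""
    return [t.strip(".") for t in re.findall(r"[a-z0-9.]+", text.lower())]


def semantic_score(query, doc):
    """Fraction of the query's concepts that also appear in the document."""
    q = {CONCEPTS[t] for t in tokens(query) if t in CONCEPTS}
    d = {CONCEPTS[t] for t in tokens(doc) if t in CONCEPTS}
    return len(q & d) / len(q) if q else 0.0


def keyword_score(exact_terms, doc):
    """Fraction of the must-match terms found verbatim in the document."""
    d = set(tokens(doc))
    hits = sum(1 for term in exact_terms if term.lower() in d)
    return hits / len(exact_terms) if exact_terms else 0.0


def hybrid_score(query, exact_terms, doc, alpha=0.5):
    """Weighted blend: alpha for the semantic part, the rest for keywords."""
    return (alpha * semantic_score(query, doc)
            + (1 - alpha) * keyword_score(exact_terms, doc))


query = "what is the cost of the cycle of the brand Acme on shop.example"
exact_terms = ["acme", "shop.example"]
docs = [
    "Price of the Acme bike on shop.example",
    "Price of the Zenith bike on other.example",
]

# Rank documents by the blended score, best first.
ranked = sorted(docs, key=lambda d: hybrid_score(query, exact_terms, d),
                reverse=True)
```

Both documents tie on the semantic score because both talk about the price of a bike. Only the keyword part tells the right brand and website apart, so the hybrid score ranks the Acme document first.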

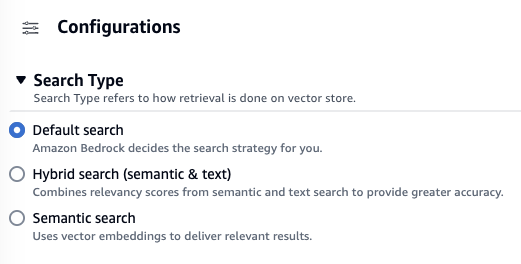

The Amazon Bedrock console currently offers three options for querying your vector database.
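If you prefer code over the console, the same choice can be made through the API. Below is a minimal sketch, assuming `boto3` is installed, AWS credentials are configured, and you already have a knowledge base. The helper names `build_retrieval_config` and `retrieve_chunks` are my own, and the knowledge base ID is something you supply. As far as I know, leaving out `overrideSearchType` lets Bedrock pick the search strategy for you.

```python
from typing import Optional

# Search types accepted by overrideSearchType in the Retrieve API.
VALID_SEARCH_TYPES = ("HYBRID", "SEMANTIC")


def build_retrieval_config(search_type: Optional[str] = None,
                           top_k: int = 5) -> dict:
    """Build the retrievalConfiguration payload for a knowledge base query.

    search_type=None omits the override so the service chooses a strategy.
    """
    vector_config = {"numberOfResults": top_k}
    if search_type is not None:
        if search_type not in VALID_SEARCH_TYPES:
            raise ValueError(f"search_type must be one of {VALID_SEARCH_TYPES}")
        vector_config["overrideSearchType"] = search_type
    return {"vectorSearchConfiguration": vector_config}


def retrieve_chunks(knowledge_base_id: str, query: str,
                    search_type: Optional[str] = None) -> list:
    """Query a Bedrock knowledge base and return the retrieved chunks."""
    import boto3  # imported lazily so the config helper works without AWS

    client = boto3.client("bedrock-agent-runtime")
    response = client.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": query},
        retrievalConfiguration=build_retrieval_config(search_type),
    )
    return response["retrievalResults"]
```

Calling `retrieve_chunks(your_kb_id, "what is the cost of the cycle ...", search_type="HYBRID")` would then request hybrid search explicitly, while `search_type="SEMANTIC"` forces semantic-only retrieval.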

👐

That’s it! With hybrid search you are going to get more accurate answers from your foundation models (FMs). Note that semantic search is more precise when the domain is narrow and there is little room for misinterpretation.

👉 For more details, you can visit the full blog post here.

👉 Want to try RAG on Bedrock? Here is a GitHub repo to help you get started.

👉 Here is a superb video on the topic as well.